My Story

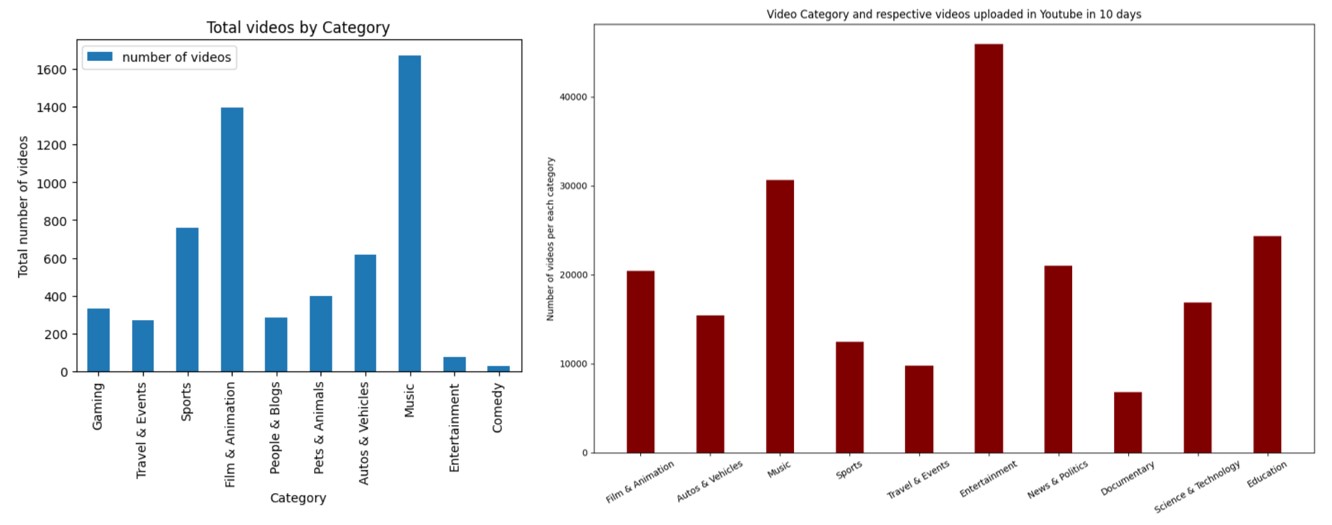

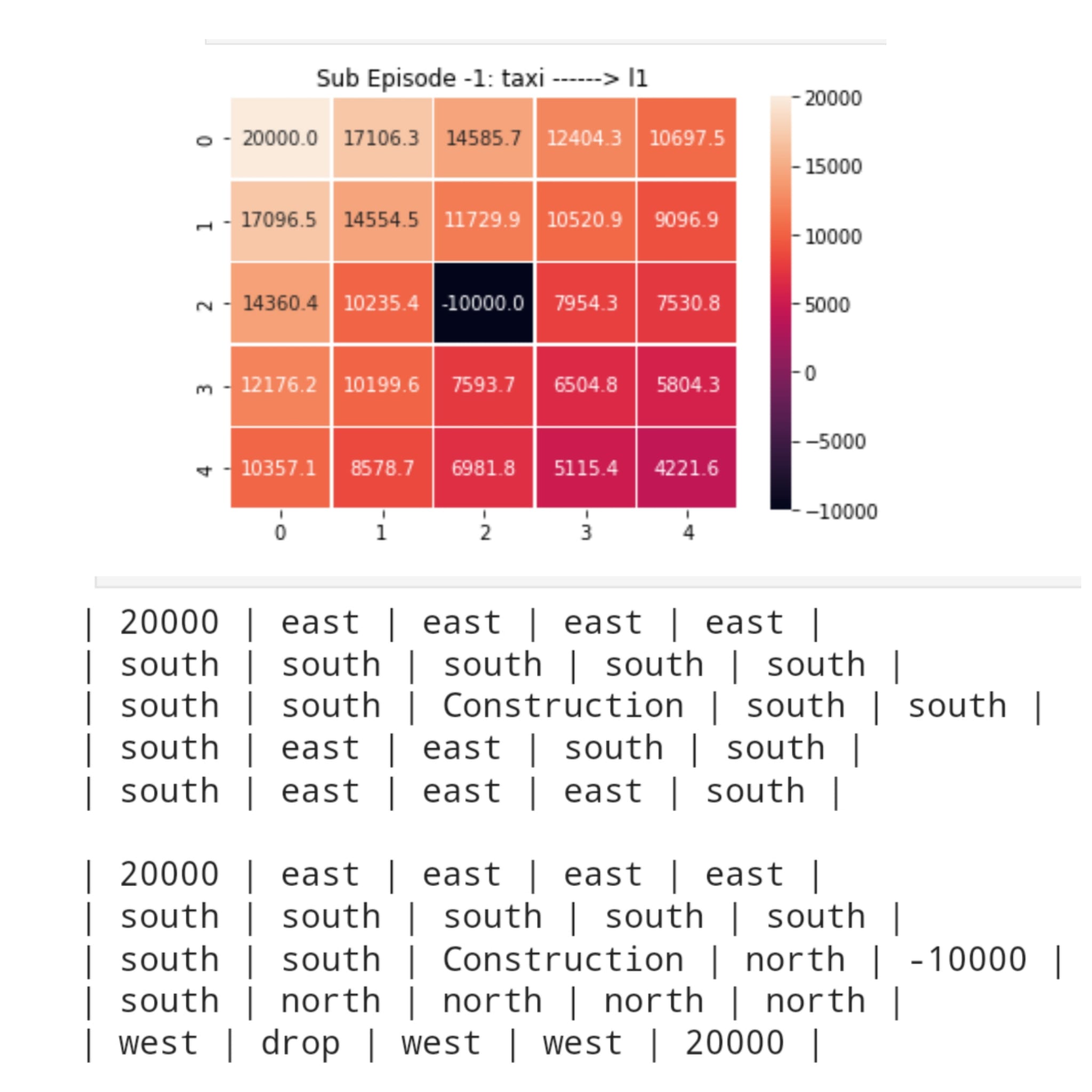

🎓 AI enthusiast with a Master’s in Artificial Intelligence and a deep passion for building intelligent systems for the real world. I’ve engineered Transformer-based models for radio map estimation 📡, built and deployed chatbots using ViT and custom NER pipelines, and published peer-reviewed research at IEEE.

☁️ Experienced in designing secure, scalable AI systems on AWS, with hands-on work in SageMaker, S3, IAM, and containerized MLOps workflows. I focus on resource-efficient models that run smoothly on CPUs, which makes them ideal for lightweight, production-grade assistants 🤖💬.

📊 My work blends ML modeling, data cleaning, and pipeline automation, whether that means enhancing radio map estimation with Deep Progressive Networks 🛰️ or building Kafka-powered YouTube analytics pipelines. I thrive at the intersection of ML, cloud, and software engineering: always learning, always building ⚙️. Let’s connect and geek out on AI, cloud, or anything in between! 🚀🔍

Languages

The languages I am proficient in and always love to work with.

Big Data Technologies

Data plays a critical role in AI, and access to big data provides a significant advantage. I have used a range of technologies to handle and analyze large datasets, which lets me put that data to work in my AI projects.

Deep Learning

Deep learning frameworks are truly works of art: they let us build intricate models and manage the complexities of training and testing. Along the way, I have become proficient with libraries that not only extend these frameworks but also add valuable visualization capabilities and support.

Tools

My favorite tools for version control, code editing, and container orchestration.